Architecture

PostgreSQL cluster architecture and concept

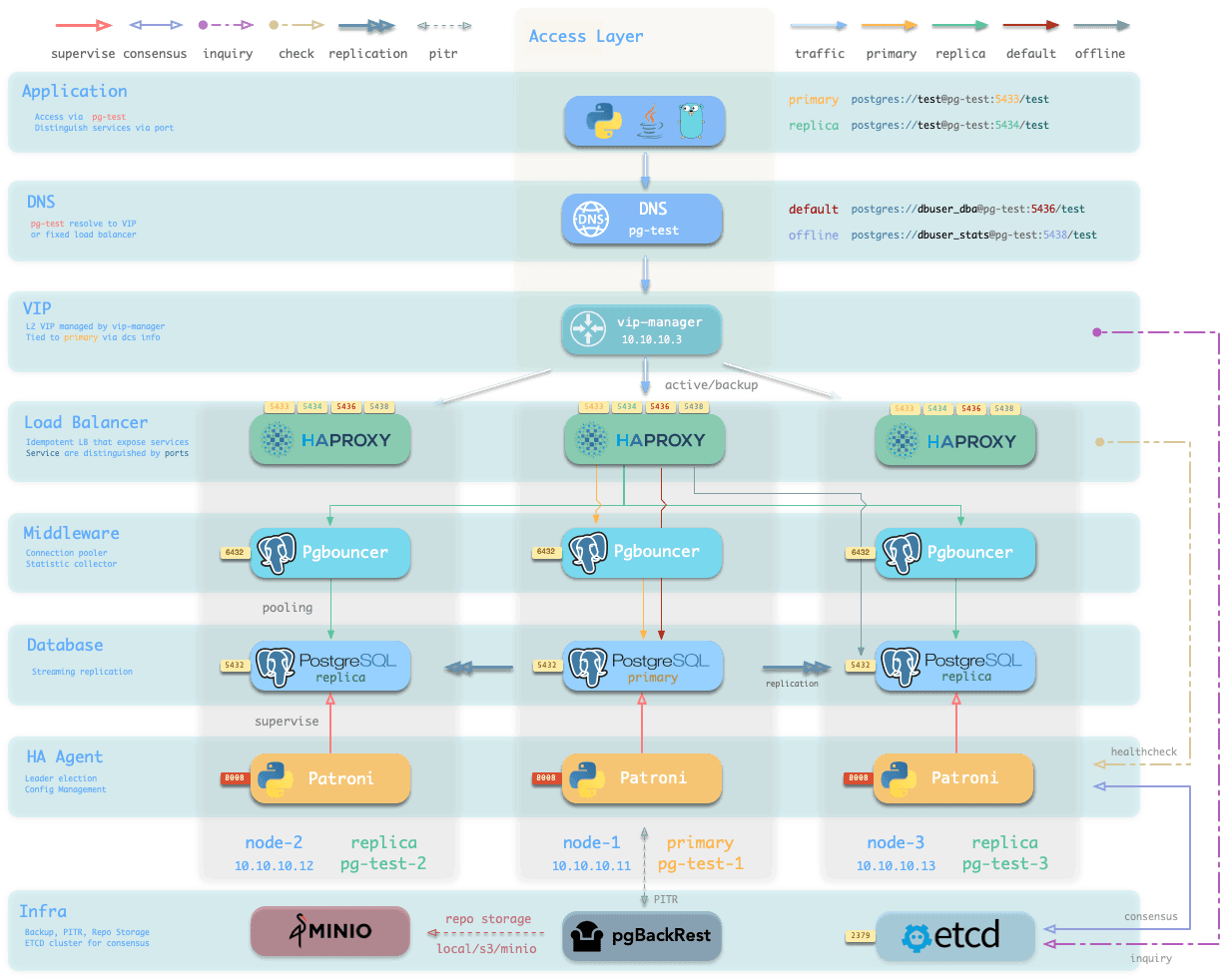

Entity-Relationships

There are four types of core entities in Pigsty’s PGSQL module:

- Cluster: An autonomous PostgreSQL business unit, the top-level namespace for other entities.

- Service: An abstraction of cluster ability, traffic routes, and expose services via different node ports.

- Instance: A postgres server which consists of a group of running processes & files on a single node.

- Node: An abstraction of hardware resources, which can be bare metal, virtual machine, or k8s pods.

Architecture

Here's a PostgreSQL Cluster pg-test described in the config inventory:

pg-test:

hosts:

10.10.10.11: { pg_seq: 1, pg_role: primary }

10.10.10.12: { pg_seq: 2, pg_role: replica }

10.10.10.13: { pg_seq: 3, pg_role: replica }

vars:

pg_cluster: pg-test

It defines a HA PostgreSQL cluster looks like the above, and here are related entities in this cluster:

- 1 PostgreSQL cluster:

pg-test - 2 Instance Roles:

primary&replica - 3 PostgreSQL Instances:

pg-test-1,pg-test-2,pg-test-3 - 3 Nodes:

10.10.10.11,10.10.10.12,10.10.10.13 - 4 PostgreSQL Services, auto generated by default:

pg-test-primary: Read-Write Service (route to primary pgbouncer)pg-test-replica: Read-Only Service (route to replicas pgbouncer)pg-test-default: Direct RW Service (route to primary postgres)pg-test-offline: Offline Read Service (route to dedicated postgres)

HA Description

The PostreSQL cluster is managed by Patroni, which is a battle-tested HA solution for PostgreSQL. It will setup PG Replication on multiple nodes, and perform automatic failover when the primary node is down.

The backup is handled by pgBackRest, which is a powerful backup tool for PostgreSQL, which supports incremental backup/restore, compression, encryption, backup to local disk or S3 / MinIO.

The pgbouncer is a lightweight connection pooler which can increase the performance with high-concurrency. It is 1:1 deployed with the Postgres server and used by primary / replica services by default.

The services are exposed by HAProxy, which is a high-performance TCP/HTTP load balancer, it's part of NODE module. And 4 default services are auto exposed in an idempotent way on all cluster nodes.

The application can visit any of the haproxy to access the Postgres cluster, and the traffic will be routed to the correct instance based on patroni health check endpoints. So failover is transparent to the apps.

The patroni requires a functioning ETCD in your deployment, and pgbackrest can use the optional MinIO as centralized backup storage; and monitoring exporters will collect metrics & logs into the Infra module.

Components

The PGSQL Node consists of the following components (some can be disabled)

| Component | Port | Description |

|---|---|---|

postgres | 5432 | PostgreSQL Server Process Managed by Patroni |

pgbouncer | 6432 | Pgbouncer Connection Pool |

pgbackrest | - | Backup and point-in-time-recovery tools |

patroni | 8008 | Patroni HA Component, Manage postgres |

primary @ haproxy | 5433 | Primary connection pool: Read/Write Service |

replica @ haproxy | 5434 | Replica connection pool: Read-only Service |

default @ haproxy | 5436 | Primary Direct Connect Service |

offline @ haproxy | 5438 | Offline Direct Connect: Offline Read Service |

pg_exporter | 9630 | postgres Monitoring Metrics Exporter |

pgbouncer_exporter | 9631 | pgbouncer Monitoring Metrics Exporter |

pgbackrest_exporter | 9854 | pgbackrest Monitoring Metrics Exporter |

vip-manager | - | Bind VIP to the primary |

Interaction

Meanwhile, the Infra Node consists of the following components which interact with PGSQL.

| Component | Port | Domain | Description |

|---|---|---|---|

nginx | 80 | h.pigsty | Web Service Portal (YUM/APT Repo) |

alertmanager | 9093 | a.pigsty | Alert Aggregation and delivery |

prometheus | 9090 | p.pigsty | Monitoring Time Series Database |

grafana | 3000 | g.pigsty | Visualization Platform |

lok | 3100 | - | Logging Collection Server |

pushgateway | 9091 | - | Collect One-Time Job Metrics |

blackbox_exporter | 9115 | - | Blackbox Probing |

dnsmasq | 53 | - | DNS Server |

chronyd | 123 | - | NTP Time Server |

ansible | - | - | Run playbooks |

- Cluster DNS is resolved by DNSMASQ on infra nodes

- Cluster VIP is manged by

vip-manager, which bind to cluster primary.vip-managerwill acquire cluster leader info written bypatronifrometcdcluster directly

- Cluster services are exposed by Haproxy on nodes, services are distinguished by node ports (

543x).- Haproxy port 9101: monitoring metrics & stats & admin page

- Haproxy port 5433: default service that routes to primary pgbouncer: primary

- Haproxy port 5434: default service that routes to replica pgbouncer: replica

- Haproxy port 5436: default service that routes to primary postgres: default

- Haproxy port 5438: default service that routes to offline postgres: offline

- HAProxy will route traffic based on health check information provided by

patroni.

- Pgbouncer is a connection pool middleware that buffers connections, exposes extra metrics, and brings extra flexibility @ port 6432

- Pgbouncer is stateless and deployed with the Postgres server in a 1:1 manner through a local unix socket.

- Production traffic (Primary/Replica) will go through pgbouncer by default (can be skipped by

pg_default_service_dest) - Default/Offline service will always bypass pgbouncer and connect to target Postgres directly.

- Postgres provides relational database services @ port 5432

- Install PGSQL module on multiple nodes will automatically form a HA cluster based on streaming replication

- PostgreSQL is supervised by

patroniby default. - Patroni will supervise PostgreSQL server @ port 8008 by default

- Patroni spawn postgres servers as the child process

- Patroni uses

etcdas DCS: config storage, failure detection, and leader election. - Patroni will provide Postgres information through a health check, used by HAProxy

- Patroni metrics will be scraped by prometheus on infra nodes

- PG Exporter will expose postgres metrics @ port 9630

- Pgbouncer Exporter will expose pgbouncer metrics @ port 9631

- Pgbouncer’s metrics will be scraped by prometheus on infra nodes

- pgBackRest will work on the local repo by default (

pgbackrest_method)- If

local(default) is used as the backup repo, pgBackRest will create local repo under the primary’spg_fs_bkup - If

miniois used as the backup repo, pgBackRest will create the repo on the dedicated MinIO cluster inpgbackrest_repo.minio

- If

- Postgres-related logs (postgres,pgbouncer,patroni,pgbackrest) are exposed by promtail @ port 9080

- Promtail will send logs to Loki on infra node

Full ER Diagram

There is one config inventory file and one infra corresponding to a Pigsty deployment. And there may have multiple database clusters in a Pigsty deployment.

A Cluster / Instance may have multiple Databases, and Databases contain Tables and other Objects (Query, Index, Function, Seq, ...).